Confidential AI

Overview

Run Large Language Models securely in GPU TEE for confidential AI.

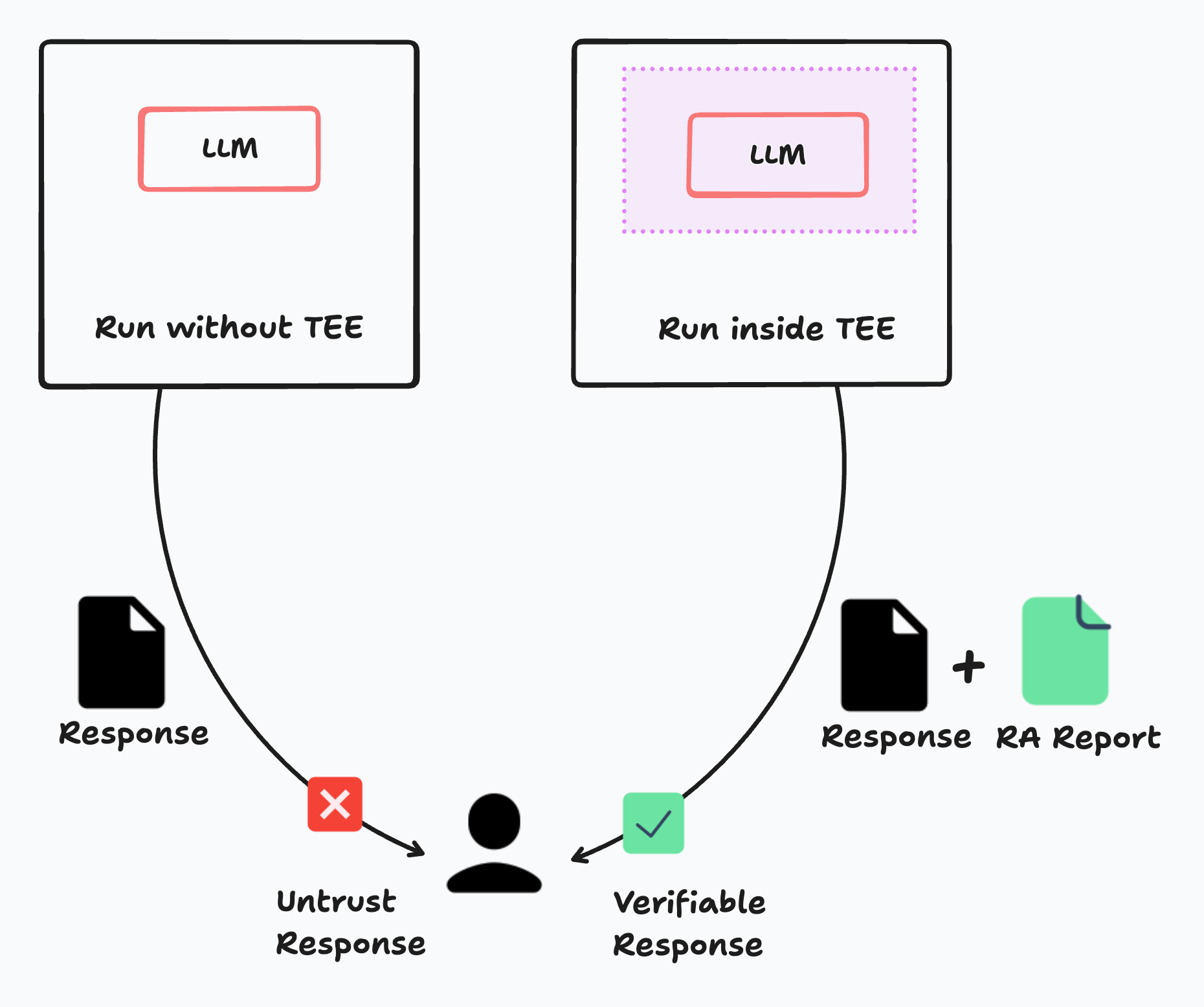

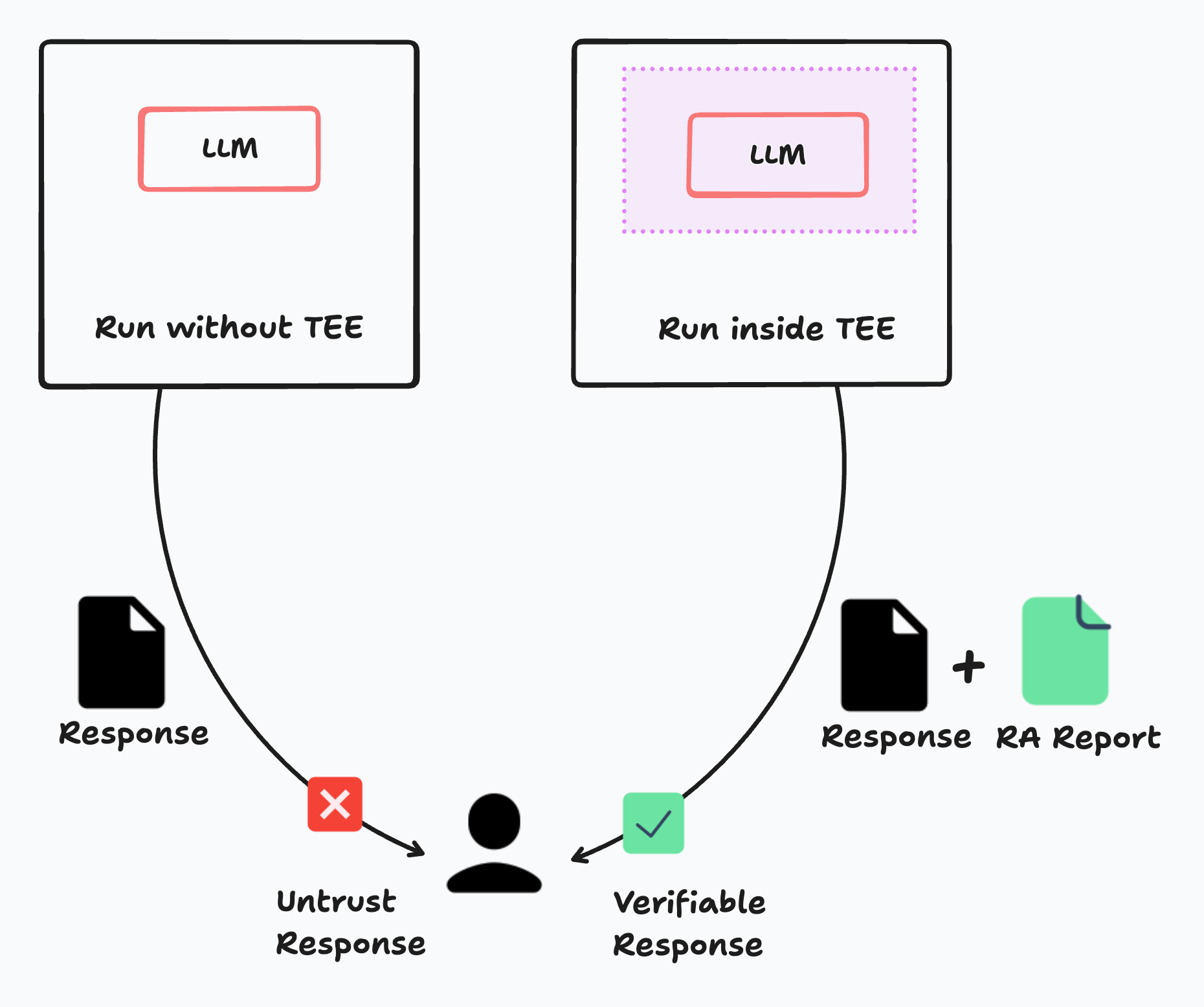

Confidential AI, also called private AI, addresses critical concerns such as data privacy, secure execution, and computation verifiability, making it indispensable for sensitive applications. As the diagram illustrates, users currently cannot fully trust the responses returned by LLMs from services like OpenAI or Meta, because those responses carry no cryptographic verification. Running the LLM inside a Trusted Execution Environment (TEE) lets the service return a verification primitive, known as a Remote Attestation (RA) Report, alongside each response. Users can then verify the AI generation results locally without relying on any third party.

Phala Cloud offers two main products that provide GPU TEE for confidential AI.

Confidential Models offers a range of models with hassle-free integration through an OpenAI-compatible format. Deploy seamlessly across cloud, on-premises, or edge environments.

If you want a GPU TEE server to run your own GPU service under TEE protection, choose the Confidential GPU service.
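To make the OpenAI-compatible format mentioned for Confidential Models more concrete, here is a minimal sketch of what a chat completions request in that format typically looks like. The base URL, model name, and API key below are hypothetical placeholders, not values taken from Phala Cloud; consult the product documentation for the real endpoint and model identifiers. The sketch only builds the request and does not send it.

```python
import json
import urllib.request

# Hypothetical values for illustration only; replace them with the endpoint
# and model name given in the provider's documentation.
BASE_URL = "https://example.invalid/v1"
MODEL = "example-model"


def build_chat_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("YOUR_API_KEY", "Hello")
    # Inspect the request locally before pointing it at a real endpoint.
    print(req.full_url)
    print(req.data.decode("utf-8"))
```

Because the request shape follows the OpenAI chat completions convention, an existing OpenAI-style client should in principle only need its base URL and API key changed. Passing the built request to `urllib.request.urlopen` would send it once the placeholders are replaced.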

Confidential Models

Run Large Language Models in GPU TEE

Confidential GPU

Rent a GPU TEE server to run your own model.

Open Source

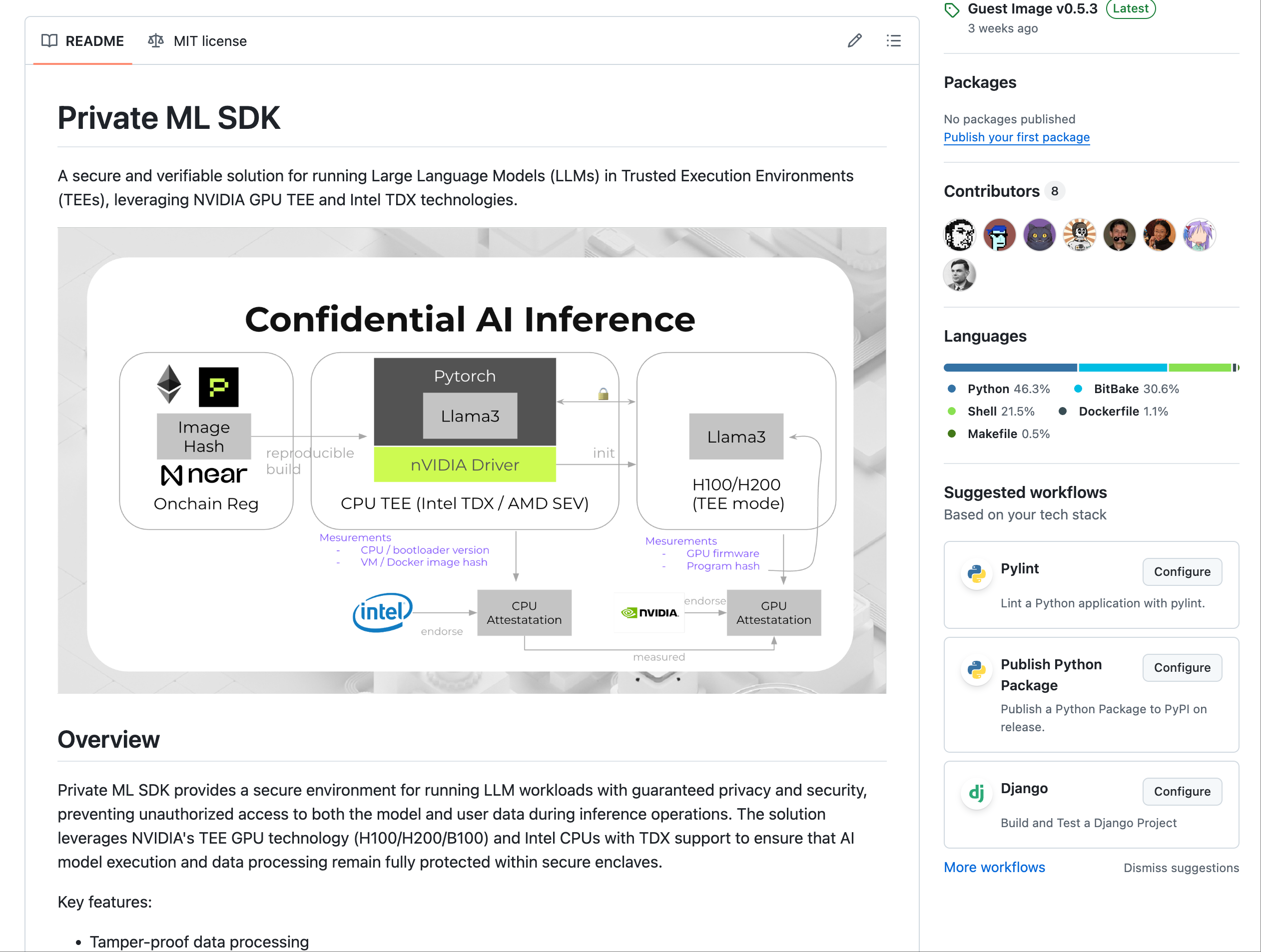

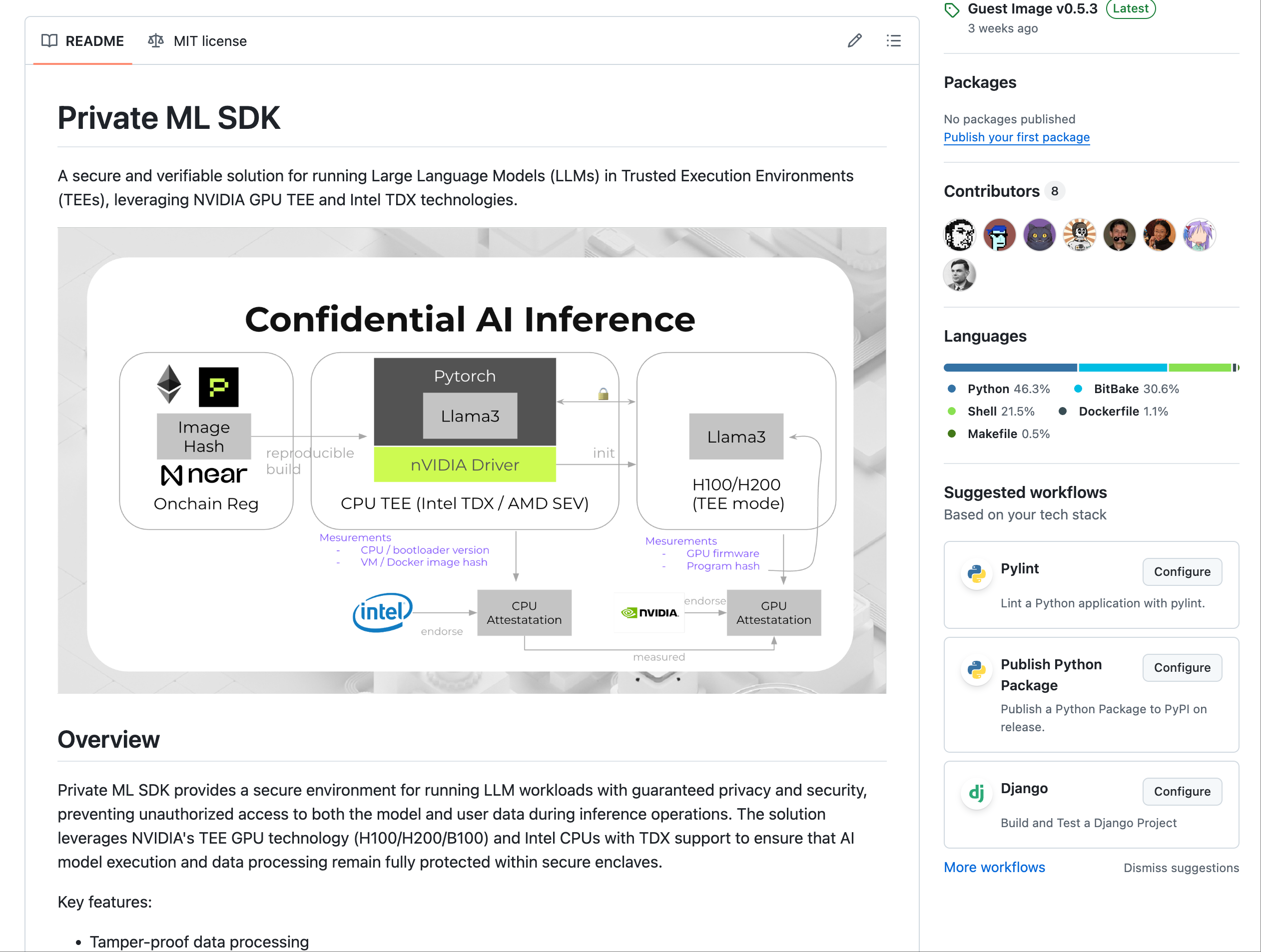

The implementation for running LLMs in GPU TEE is available in the private-ml-sdk GitHub repository. The project is built by Phala Network and was made possible through a grant from NEARAI. The SDK provides the tools and infrastructure needed to deploy and run LLMs securely within GPU TEE.